Featured Projects

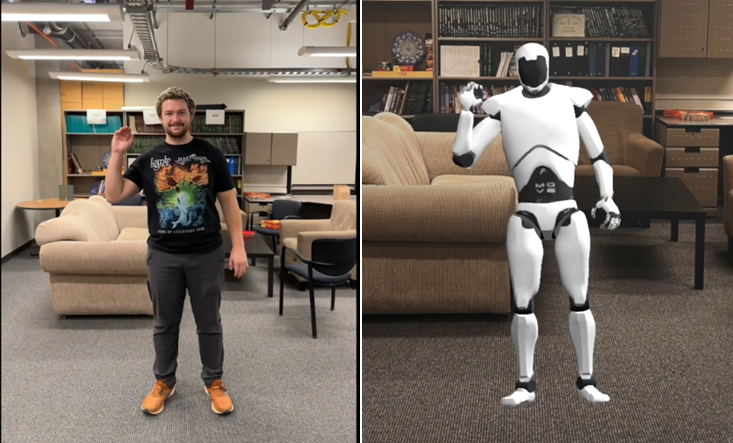

Real-time Avatar:

We are using LiDAR technology to capture, analyze, and transform human movement into digital form, with the aim of redefining digital interactions through lifelike avatars for VR, gaming, and remote collaboration. Goals of this new research direction:

✤ Expand integration into VR, gaming, and remote collaboration applications

✤ Increase motion capture precision and responsiveness

✤ Refine avatars for realistic, lifelike motion

✤ Capture and process avatars in real time (a real challenge)

https://www.youtube.com/watch?v=fsJkoVnhf2o&list=PLPJ3KHz09Fj3qdhSZUkWs71eJF_osf35N&index=2

Real-time Multi-party Interaction:

Virtual reality is reshaping how we connect across distances, enabling immersive shared experiences. This project connects two VR headsets via the Internet, allowing users to see each other and engage in real-time activities together. By creating a shared virtual space, we bring a sense of presence and interaction that feels close to reality, opening up new possibilities for collaboration, play, and exploration. Potential applications of this new research direction:

✤ Remote Team Collaboration

✤ Virtual Social Gatherings

✤ Interactive Gaming

https://www.youtube.com/watch?v=ILvXwBnn3Ok&list=PLPJ3KHz09Fj3qdhSZUkWs71eJF_osf35N&index=2

ETHEREAL Project:

Approximately one-third of individuals with autism are nonspeaking: they cannot communicate effectively using speech. Under the leadership of Dr. Diwakar Krishnamurthy, we are developing the ETHEREAL project to give nonspeaking autistics an augmented-reality (AR) means of communicating and learning. Our applications were developed after extensive consultation with the nonspeaking community (nonspeakers who have learned to communicate by typing, their communication partners, parents, and occupational therapists).

https://www.youtube.com/watch?v=UiPhZfA71kg&t=21s